Interview on AI in software development: “I’m never letting go of Copilot’s AI agent again!”

We spoke with our senior developer Bernhard Reuberger about how our team uses artificial intelligence in developing autoscan and autosign.

Question: In our last conversation about AI at the end of 2023 you were mostly working directly with ChatGPT – what has changed?

Bernhard: GitHub Copilot has taken a huge leap forward, especially in the last few weeks. Back in late 2023 Copilot was still pretty annoying when coding. But a few weeks ago the AI agent in GitHub Copilot went into preview. And what can I say – I’m never giving it up!

What makes GitHub Copilot’s AI agent so special?

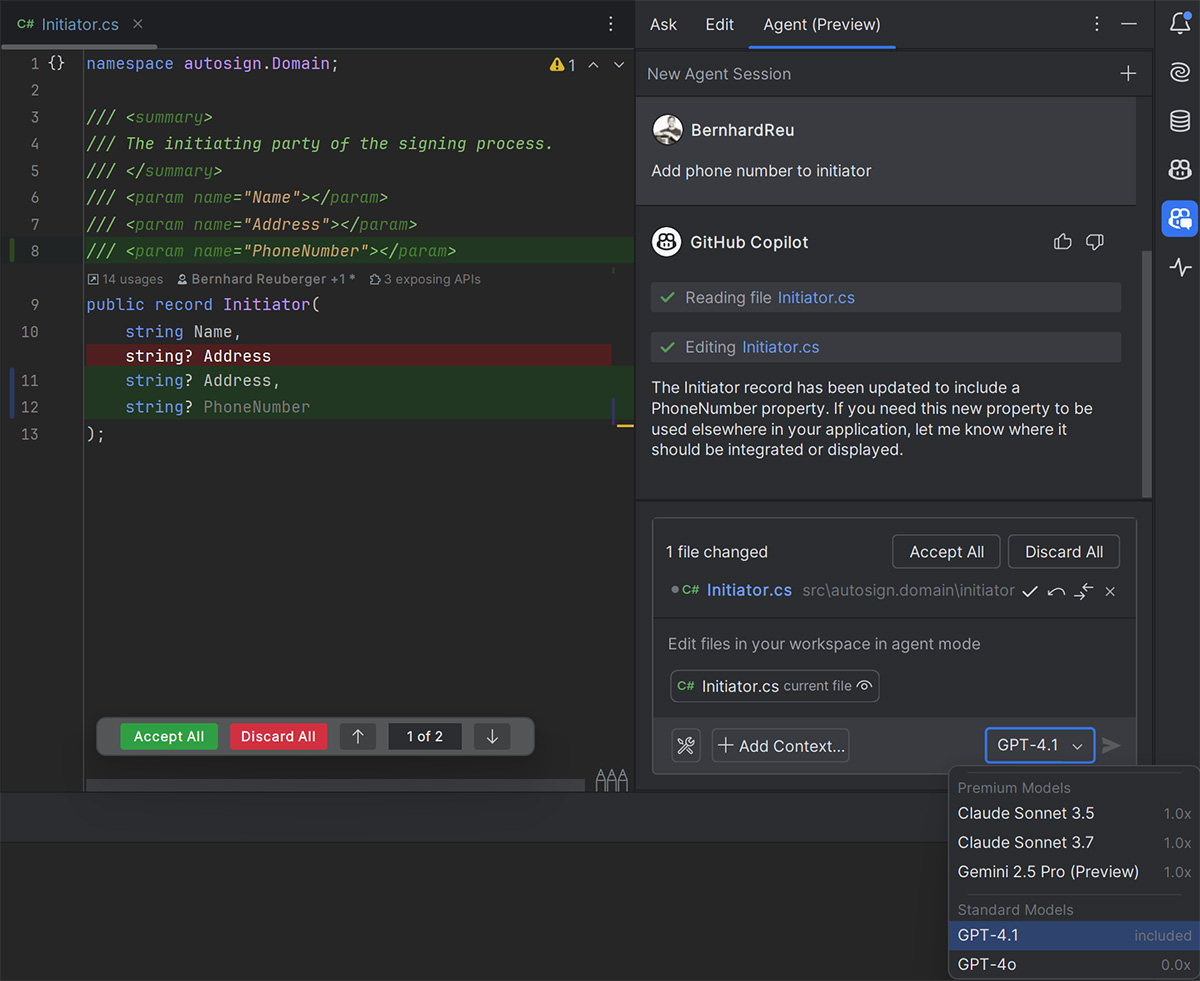

In general I can choose which AI model to base the current task on, for example ChatGPT from OpenAI or Claude from Anthropic. That’s great because the models have different strengths and weaknesses.

The agent can also do things that didn’t work before: it understands the context I’m working in much better. It can reference files – or even find them on its own when you mention certain words, classes, and so on. It will create new files itself and decide where they belong. And it does all of this far faster than I could – what takes me 15 minutes it finishes in 30 seconds.

That sounds terrific: you tell the AI agent what to do and just watch it work.

(laughs) Well, it’s not quite that simple. First, I have to think carefully about what I tell it. Prompt quality is critical and, in my view, the reason opinions on the new AI agent vary so much. A prompt can’t be too broad or too narrow and has to be as clear as possible.

And the code the AI agent spits out rarely fits 100 percent. I would never ship that code straight into production for autoscan or autosign. Our production-ready code has to be stable, secure, tested, and maintainable. For that we need to understand every single generated line. It turns out AI-generated code is usually “only” about 50-70 percent correct. But that’s a great starting point and already saves a lot of time.

Which tasks is the AI agent particularly good at?

Anything with recurring patterns for which plenty of examples and established solutions exist. I love having the AI write our tests – in those cases the result is often spot-on, especially when similar tests already exist. I also can’t remember the last time I wrote a PowerShell script entirely by myself. The AI is fantastic for prototyping and proofs of concept, where code perfection isn’t critical.

What doesn’t work as well are big-picture architecture questions or technology and concept choices. There it pays to try different models; my favorites are ChatGPT and Grok. In general I like to switch back and forth to see what the latest version of each model can do – that changes constantly! By the way, in GitHub Copilot I sometimes just swap the model when I don’t like an answer and let a different one tackle the prompt again.

How has the workday changed?

One interesting shift is in workflow: as a developer you’re normally in a tunnel while coding – just typing and typing. Now I write a prompt, watch the AI think, and have to keep myself from mentally drifting off. I use the time to think through next steps, such as needed tests or documentation. That prevents a context switch that can really yank you out of focus. When the agent finishes, I can simply accept or reject each individual change.

How long will AI still need humans in software development?

I’ll go out on a limb and say humans will be calling the final shots for a long time – after all, it’s GitHub Copilot, not GitHub Pilot. Despite the challenges AI brings, I see it as an immensely powerful tool. AI can amplify, even multiply, our own abilities – making us better and faster.

How important has AI become for the AUTOSCAN team?

It makes us better as a team. We started exploring it early to remove any hesitation right from the start and to learn this new tool quickly. By now AI is an integral part of our software development. Thanks to AI tools, you can multiply the output of our three developers by roughly 1.2.

Besides development, in which other areas do you see meaningful potential for AI? Any examples?

Absolutely! In general, we’ve begun asking in every non-development activity whether AI could help. One example would be an internal AI bot that helps us with support tickets. It could know our product history, including every feature and bug fix, plus all our documentation. That would save a lot of the research that’s sometimes needed to solve more complex support cases. Within the products autoscan and autosign themselves, though, we only want to use AI where it truly makes sense, not just for its own sake!